Despite all the planning and painstaking development involved in creating a microservices application, microservices can and will fail. It’s up to development teams to create applications that can handle failure gracefully. While the application ultimately dictates the right approach for resiliency, a common theme among these approaches is using microservices resiliency patterns.

In our guide to microservices resiliency patterns, we look at how to predict application failure points, and at the resiliency patterns you can use to help prevent cascading, and otherwise catastrophic, microservices application failures.

Creating Resilient Microservices Applications

For developers, it’s no longer a matter of “if” or “when” something will go wrong — that’s a given — but rather how to architect for automatic self-healing and application resiliency. With enough services and load on the system, the application will always be in a state of partial failure and recovery due to hardware issues, networking glitches, virtual machine crashes, bursty traffic causing timeouts, and the like.

But how do developers approach microservice resiliency? For some, that may mean maintaining full functionality and adequate performance during service failures. For others, it may mean just preventing one failure from causing cascading failure of the application. How developers and application architects prevent those failures varies from project to project. But the core concept of application resiliency, and the resiliency patterns and techniques used to prevent these failures, should be a major topic of discussion for every development team.

What Are Resilience Patterns?

Resiliency patterns are service architecture patterns that help prevent cascading failures and preserve functionality when a service fails.

Common resiliency patterns used in application development include the bulkhead pattern and circuit breaker pattern. These patterns often incorporate other resiliency techniques like fallback, retry, and timeout.
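To make fallback, retry, and timeout concrete, here is a minimal Java sketch that combines all three around a single remote call. `ResilientCall.call` is a hypothetical helper written for illustration, not a library API. The per-attempt timeout, attempt count, and backoff values are assumptions you would tune per service.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Hypothetical helper: timeout per attempt, retry with exponential backoff, then fallback.
final class ResilientCall {
    private ResilientCall() {}

    static <T> T call(ExecutorService executor, Callable<T> remoteCall, long timeoutMillis,
                      int maxAttempts, long initialBackoffMillis, Supplier<T> fallback) {
        long backoff = initialBackoffMillis;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            Future<T> future = executor.submit(remoteCall);
            try {
                return future.get(timeoutMillis, TimeUnit.MILLISECONDS); // timeout
            } catch (TimeoutException e) {
                future.cancel(true); // interrupt the slow call instead of letting it pile up
            } catch (ExecutionException e) {
                // the remote call threw; fall through and retry
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(backoff); // wait before retrying so we don't hammer the service
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;
                }
                backoff *= 2; // exponential backoff
            }
        }
        return fallback.get(); // fallback once every attempt has failed
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newCachedThreadPool();
        try {
            String result = call(executor,
                    () -> { throw new IllegalStateException("remote down"); },
                    100, 3, 10, () -> "cached default");
            System.out.println(result); // prints cached default
        } finally {
            executor.shutdownNow();
        }
    }
}
```

Note that the fallback here returns a canned value; in practice it might return cached data or a degraded response.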

Predicting Resilience Issues in Microservices

Many of the performance issues and fixes we’ve covered in previous articles are best seen as band-aids for performance problems.

Just because you've optimized requests from one service to another service doesn’t mean you’ve addressed the underlying architectural issue within the application.

As Mark Richards points out in his book, Finding Structural Decay in Architectures, issues that are prevalent enough, like unintended static coupling between services, can indicate a larger architectural mismatch.

Because many, if not most, microservices applications are transitioned from existing monolithic applications, there are bound to be a few cases where making that transition was the wrong decision.

Identifying Failure Points

If you have been troubleshooting your application and individual service performance, you have already likely identified a few services that either receive or send a lot of requests.

Optimizing those requests is important and can help to prolong availability. But, given a high enough load, the services sending or receiving those requests are likely failure points for your application.

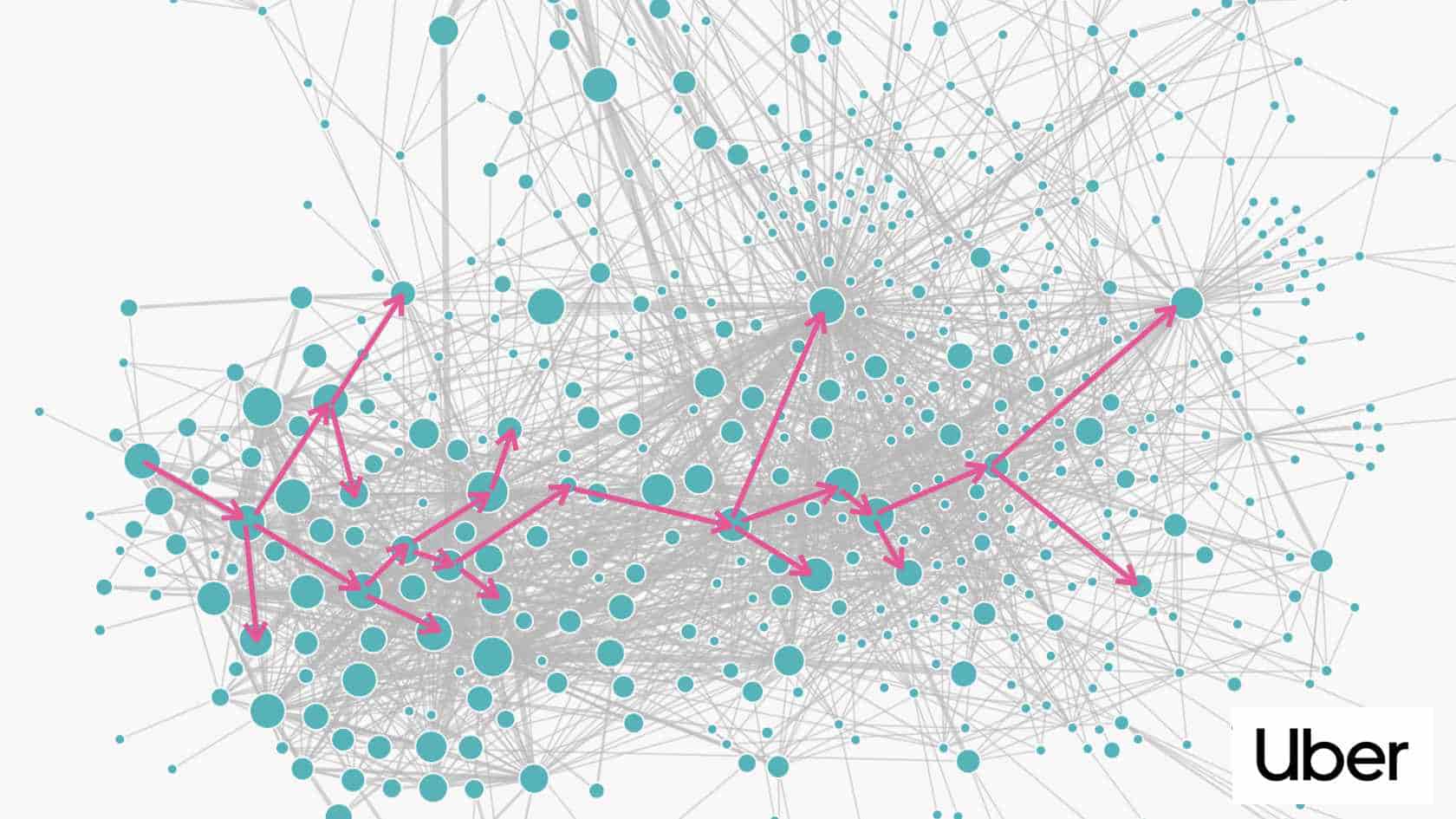

For enterprise-scale microservices applications like Uber’s, where engineers run thousands upon thousands of microservices, tracing requests across these services can be hopelessly complex, with traces that have hundreds of thousands of spans.

Using visualization tools to make sense of those complex traces and how they progress through the microservices that comprise the application helps engineers to identify and bolster application failure points proactively instead of reactively. If you want a good overview of how Uber uses visualization techniques to manage the complexity of their microservices application, then we highly recommend this presentation on microservices complexity from Yuri Shkuro.
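A rough first pass at spotting the services that send or receive a lot of requests is to count how often each service appears as a caller or callee in your trace data. The sketch below assumes the traces have already been reduced to hypothetical `CallEdge(caller, callee)` pairs; the class and the service names are made up for illustration, and real tracing backends expose this data in their own formats.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical: one caller -> callee request extracted from distributed traces.
record CallEdge(String caller, String callee) {}

final class HotspotFinder {
    private HotspotFinder() {}

    // Requests each service sends (fan-out) plus requests it receives (fan-in).
    static Map<String, Integer> requestVolume(List<CallEdge> edges) {
        Map<String, Integer> volume = new HashMap<>();
        for (CallEdge edge : edges) {
            volume.merge(edge.caller(), 1, Integer::sum);
            volume.merge(edge.callee(), 1, Integer::sum);
        }
        return volume;
    }

    // The n busiest services (ties broken alphabetically): likely failure points under load.
    static List<String> topServices(List<CallEdge> edges, int n) {
        return requestVolume(edges).entrySet().stream()
                .sorted(Map.Entry.<String, Integer>comparingByValue().reversed()
                        .thenComparing(Map.Entry.<String, Integer>comparingByKey()))
                .limit(n)
                .map(Map.Entry::getKey)
                .toList();
    }

    public static void main(String[] args) {
        List<CallEdge> edges = List.of(
                new CallEdge("gateway", "orders"),
                new CallEdge("gateway", "users"),
                new CallEdge("orders", "inventory"),
                new CallEdge("orders", "payments"),
                new CallEdge("payments", "fraud"));
        System.out.println(topServices(edges, 2)); // prints [orders, gateway]
    }
}
```

Raw counts are only a starting point; weighting edges by latency or error rate would bring the ranking closer to what the visualization tools surface.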

Microservices Resilience Patterns

In the next section, we’ll cover three resilience patterns that can help developers to engineer failure-resistant microservices applications — the circuit breaker pattern, bulkhead pattern, and an engineering approach using stateless services. As we mentioned above, these patterns often employ other resiliency techniques like fallback, timeout, and retry.

Circuit Breaker Pattern

Microservices applications often rely on remote resources, like third-party services, as a key component of their program. But what happens when one of those remote resources times out upon request? Does your microservice continue calling that resource in an endless loop until it fulfills that request? What happens when multiple services are requesting that same remote resource?

What Is the Circuit Breaker Pattern?

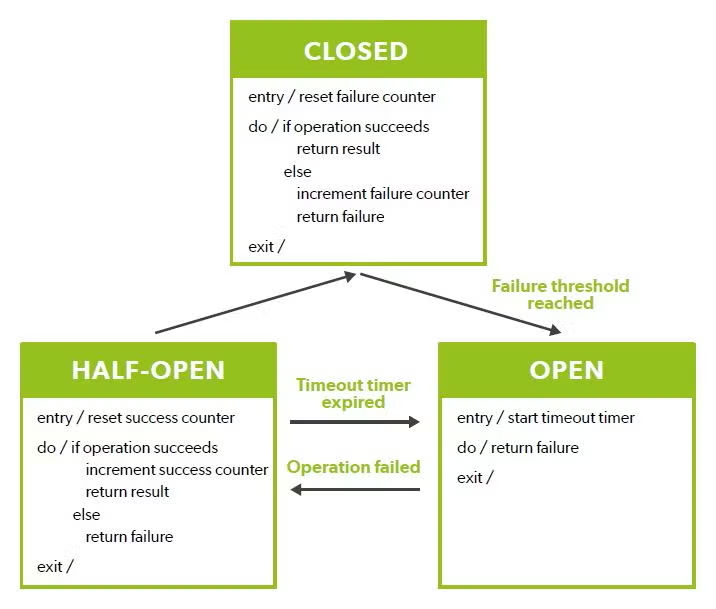

The circuit breaker pattern is an application resiliency pattern that limits the number of requests to a service based on configured thresholds, helping to prevent the service from being overloaded.

Additionally, by monitoring how many requests to that service fail, a circuit breaker can “trip” once the number of failures within a given time window reaches a configured threshold, and then block additional requests to the service for an allotted time.

When recovering from failure, the circuit breaker can return to full functionality incrementally, letting a limited number of trial requests through first so that a sudden flood of requests doesn’t overload the recovering service.
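The closed, tripped (open), and incremental-recovery (often called “half-open”) behavior described above can be sketched in plain Java. This is a minimal single-process sketch under stated assumptions: the `CircuitBreaker` class and its thresholds are hypothetical, and the clock is injectable only so the state changes are easy to test.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

final class CircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // failures within the window that trip the breaker
    private final long windowMillis;    // sliding window for counting failures
    private final long openMillis;      // how long to reject calls after tripping
    private final int trialCalls;       // successful trial calls needed to close again
    private final LongSupplier clock;   // current time in milliseconds
    private final Deque<Long> failureTimes = new ArrayDeque<>();
    private State state = State.CLOSED;
    private long openedAt;
    private int trialsStarted;
    private int trialSuccesses;

    CircuitBreaker(int failureThreshold, long windowMillis, long openMillis,
                   int trialCalls, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.windowMillis = windowMillis;
        this.openMillis = openMillis;
        this.trialCalls = trialCalls;
        this.clock = clock;
    }

    synchronized State state() { return state; }

    <T> T call(Supplier<T> remoteCall, Supplier<T> fallback) {
        if (!tryAcquire()) {
            return fallback.get(); // fail fast without touching the remote service
        }
        try {
            T result = remoteCall.get();
            onSuccess();
            return result;
        } catch (RuntimeException e) {
            onFailure();
            return fallback.get();
        }
    }

    private synchronized boolean tryAcquire() {
        if (state == State.OPEN) {
            if (clock.getAsLong() - openedAt < openMillis) {
                return false; // still within the allotted open time
            }
            state = State.HALF_OPEN; // open period over: start probing
            trialsStarted = 0;
            trialSuccesses = 0;
        }
        if (state == State.HALF_OPEN) {
            if (trialsStarted >= trialCalls) {
                return false; // only a limited number of trial requests get through
            }
            trialsStarted++;
        }
        return true;
    }

    private synchronized void onSuccess() {
        if (state == State.HALF_OPEN && ++trialSuccesses >= trialCalls) {
            state = State.CLOSED; // every trial succeeded: restore full traffic
            failureTimes.clear();
        }
    }

    private synchronized void onFailure() {
        long now = clock.getAsLong();
        if (state == State.OPEN) {
            return; // already tripped by another caller
        }
        if (state == State.HALF_OPEN) {
            trip(now); // a trial failed: the service is still unhealthy
            return;
        }
        failureTimes.addLast(now);
        while (now - failureTimes.peekFirst() > windowMillis) {
            failureTimes.removeFirst(); // forget failures outside the window
        }
        if (failureTimes.size() >= failureThreshold) {
            trip(now);
        }
    }

    private void trip(long now) {
        state = State.OPEN;
        openedAt = now;
        failureTimes.clear();
    }

    public static void main(String[] args) {
        long[] now = {0};
        CircuitBreaker breaker = new CircuitBreaker(3, 1_000, 5_000, 2, () -> now[0]);
        Supplier<String> failing = () -> { throw new IllegalStateException("remote down"); };
        for (int i = 0; i < 3; i++) breaker.call(failing, () -> "fallback");
        System.out.println(breaker.state()); // prints OPEN
        now[0] = 5_000; // the open period has elapsed
        breaker.call(() -> "ok", () -> "fallback");
        System.out.println(breaker.state()); // prints HALF_OPEN
        breaker.call(() -> "ok", () -> "fallback");
        System.out.println(breaker.state()); // prints CLOSED
    }
}
```

Counting failures in a time window, rather than consecutive failures, keeps occasional errors spread over a long period from tripping the breaker.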

When to Use the Circuit Breaker Microservices Pattern

The circuit breaker pattern can add significant overhead to your application, so it’s best used sparingly, and only where you’re accessing a remote service or shared resource that is prone to failure. If you use a service mesh like Istio, it’s easy to experiment with various resiliency patterns, including circuit breakers, to see which techniques work best for each service.

Bulkhead Pattern

When architecting microservice-based applications, it’s easy to overload specific services within the application. As we’ve outlined throughout this white paper, overloading these services can lead to slow applications at best, and catastrophic failures at worst.

What is the Bulkhead Pattern?

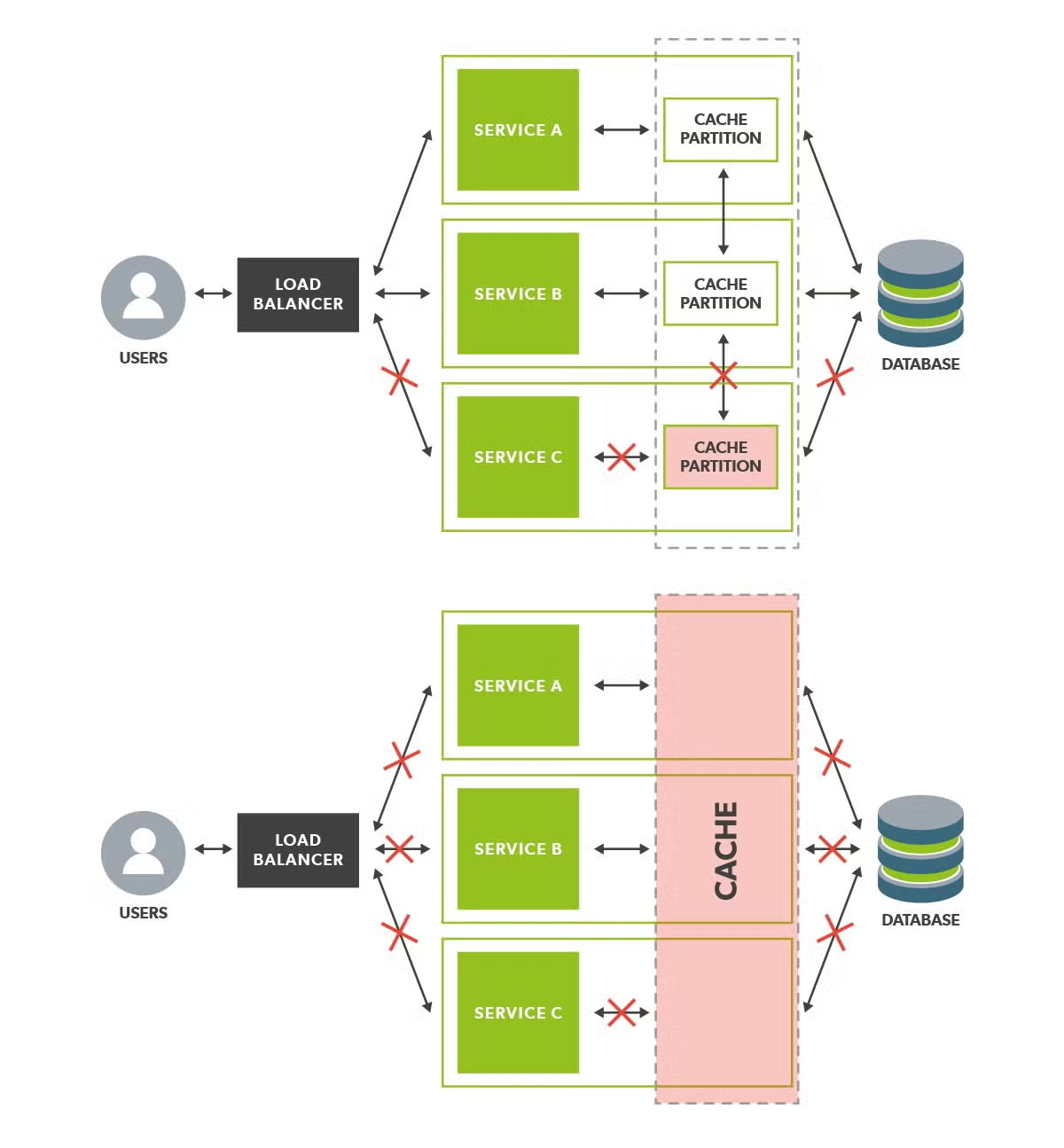

The bulkhead pattern is an application resiliency pattern that isolates services and consumers via partitions.

These bulkhead partitions are used to prevent cascading failures, to let functionality degrade gracefully when services fail rather than failing completely, and to prioritize access for more important consumers and services.
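One common way to build bulkhead partitions inside a single service is to give each dependency or consumer group its own bounded pool of concurrent permits, so one saturated partition cannot consume capacity the others rely on. The sketch below uses `java.util.concurrent.Semaphore`; the `Bulkheads` class and the partition names and limits are hypothetical. A dedicated thread pool per partition is the other common variant.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

final class Bulkheads {
    private final Map<String, Semaphore> partitions = new ConcurrentHashMap<>();

    // limits: maximum concurrent calls per partition; important consumers get more permits.
    Bulkheads(Map<String, Integer> limits) {
        limits.forEach((name, permits) -> partitions.put(name, new Semaphore(permits)));
    }

    <T> T call(String partition, Supplier<T> work, Supplier<T> fallback) {
        Semaphore permits = partitions.get(partition);
        if (permits == null) {
            throw new IllegalArgumentException("unknown partition: " + partition);
        }
        if (!permits.tryAcquire()) {
            return fallback.get(); // this partition is full: degrade instead of queuing
        }
        try {
            return work.get();
        } finally {
            permits.release();
        }
    }

    int available(String partition) {
        return partitions.get(partition).availablePermits();
    }

    public static void main(String[] args) {
        Bulkheads bulkheads = new Bulkheads(Map.of("reports", 1, "checkout", 4));
        // While the single "reports" permit is held, a second reports call is rejected...
        String nestedReport = bulkheads.call("reports",
                () -> bulkheads.call("reports", () -> "report", () -> "reports busy"),
                () -> "unused");
        // ...but checkout calls in their own partition are unaffected.
        String checkout = bulkheads.call("reports",
                () -> bulkheads.call("checkout", () -> "order placed", () -> "checkout busy"),
                () -> "unused");
        System.out.println(nestedReport); // prints reports busy
        System.out.println(checkout);     // prints order placed
    }
}
```

Using the non-blocking `tryAcquire()` makes callers fail fast; `tryAcquire(timeout, unit)` is an option when a short wait is acceptable.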

Using the Bulkhead Pattern in Microservices

In this example, the bulkhead pattern is applied to a database cache. With a non-partitioned cache, a failure of the cache forces every service to interact directly with the database, leading to application failure.

But in the partitioned example, we can see that the partition for service c has failed independently, resulting in the failure of service c without immediately causing failure in the other two services.

If those cache partitions were configured as a cluster sharing the same data in each instance, then the service could be configured to access one of the remaining partitions upon failure.
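The failover described above can be sketched as a client that reads from its service’s own partition first and then tries the remaining partitions in the cluster. Everything here is hypothetical: `CachePartition` is an in-memory stand-in whose `healthy` flag simulates an outage, and a real cache client would surface failures through its own exception types.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory stand-in for one cache partition.
final class CachePartition {
    private final String name;
    private final Map<String, String> data = new ConcurrentHashMap<>();
    private volatile boolean healthy = true;

    CachePartition(String name) { this.name = name; }

    void put(String key, String value) { data.put(key, value); }

    void setHealthy(boolean healthy) { this.healthy = healthy; }

    Optional<String> get(String key) {
        if (!healthy) {
            throw new IllegalStateException("cache partition " + name + " is down");
        }
        return Optional.ofNullable(data.get(key));
    }
}

// Reads from the service's own partition first, then from partitions holding the same data.
final class FailoverCacheClient {
    private final List<CachePartition> partitionsInOrder = new ArrayList<>();

    FailoverCacheClient(CachePartition primary, List<CachePartition> replicas) {
        partitionsInOrder.add(primary);
        partitionsInOrder.addAll(replicas);
    }

    Optional<String> get(String key) {
        for (CachePartition partition : partitionsInOrder) {
            try {
                return partition.get(key);
            } catch (IllegalStateException e) {
                // this partition is down; try the next one
            }
        }
        // Only this service fails; other services keep using their own partitions.
        throw new IllegalStateException("no cache partition available");
    }

    public static void main(String[] args) {
        CachePartition a = new CachePartition("a");
        CachePartition b = new CachePartition("b");
        CachePartition c = new CachePartition("c");
        for (CachePartition p : List.of(a, b, c)) {
            p.put("user:42", "Ada"); // clustered partitions share the same data
        }
        FailoverCacheClient serviceC = new FailoverCacheClient(c, List.of(a, b));
        c.setHealthy(false); // service C's own partition fails
        System.out.println(serviceC.get("user:42")); // prints Optional[Ada]
    }
}
```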

Tips for Using the Bulkhead Pattern

While useful for preventing catastrophic failures, the bulkhead pattern does add complexity to your application. For teams working on services independently, understanding where each service fits within the bulkhead pattern and how their service is partitioned relative to other services is crucial to a performant application.

Additionally, keep in mind that this pattern — while great for resiliency — can add another performance hurdle for your application.

Stateless Services

What happens if a service being called upon fails in your microservices application? If there isn’t an alternative database or service that can fulfill that request, additional services can fail, leading to a cascading failure of the entire application.

But what if you could have a copy of that service ready to go if the primary fails? Or another one that could be spun up on demand instantly if that second service fails?

Using Stateless Services for Microservices Resiliency

Stateless services can provide exactly that. Because they depend only on their inputs and don’t hold data between requests, any copy of the service can serve a request just as well as the original.

In addition, these services can be instantiated dynamically as the need arises instead of existing permanently within the application, which means less resource usage for the service and the application.
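To illustrate why any copy of a stateless service is interchangeable, here is a minimal Java sketch of a hypothetical pricing service. Everything it needs travels with the request and it keeps no per-client fields, so a replacement instance returns exactly what the original would have. The `QuoteRequest` and `QuoteService` names are made up for illustration.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.List;

// Hypothetical request: all the data the service needs arrives with the call.
record QuoteRequest(List<BigDecimal> itemPrices, BigDecimal taxRate) {}

// Stateless: no fields that change between requests, so any instance can serve any request.
// A stateful variant that kept, say, a shopping cart in memory would lose it if the instance died.
final class QuoteService {
    BigDecimal quote(QuoteRequest request) {
        BigDecimal subtotal = request.itemPrices().stream()
                .reduce(BigDecimal.ZERO, BigDecimal::add);
        BigDecimal tax = subtotal.multiply(request.taxRate());
        return subtotal.add(tax).setScale(2, RoundingMode.HALF_UP);
    }

    public static void main(String[] args) {
        QuoteRequest request = new QuoteRequest(
                List.of(new BigDecimal("10.00"), new BigDecimal("5.50")), new BigDecimal("0.10"));
        QuoteService original = new QuoteService();
        QuoteService replacement = new QuoteService(); // e.g., spun up after the original failed
        System.out.println(original.quote(request));    // prints 17.05
        System.out.println(replacement.quote(request)); // prints 17.05
    }
}
```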

For example, imagine a service that needs to serve many requests for non-persistent data, but whose demand is lumpy, arriving in bursts.

When those bursts arrive, asynchronous requests or request buffers can’t fulfill the requests fast enough to deliver a good end-user experience.

An engineer could allocate a large chunk of resources to that service, or add hardware to accommodate high loads. Alternatively, they could employ a stateless, scalable service that spins up new instances to fulfill requests during heavy load, using minimal extra resources and only while scaled out.
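Because stateless instances are interchangeable, scaling them comes down to choosing how many to run. The sketch below computes a replica count from current demand, in the spirit of the ratio used by the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)). The `ReplicaPlanner` class, the per-replica target, and the traffic numbers are hypothetical.

```java
import java.util.List;

final class ReplicaPlanner {
    private final double targetRequestsPerReplica; // load one instance should handle comfortably
    private final int minReplicas;                 // floor kept warm for steady traffic
    private final int maxReplicas;                 // cap on extra resources during bursts

    ReplicaPlanner(double targetRequestsPerReplica, int minReplicas, int maxReplicas) {
        if (targetRequestsPerReplica <= 0 || minReplicas < 0 || minReplicas > maxReplicas) {
            throw new IllegalArgumentException("invalid scaling bounds");
        }
        this.targetRequestsPerReplica = targetRequestsPerReplica;
        this.minReplicas = minReplicas;
        this.maxReplicas = maxReplicas;
    }

    // Total load / target equals ceil(currentReplicas * avgLoadPerReplica / target).
    int desiredReplicas(double totalRequestsPerSecond) {
        int desired = (int) Math.ceil(totalRequestsPerSecond / targetRequestsPerReplica);
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        ReplicaPlanner planner = new ReplicaPlanner(100, 1, 12);
        // A bursty traffic pattern, in requests per second.
        List<Double> traffic = List.of(20.0, 20.0, 900.0, 1500.0, 40.0);
        for (double rps : traffic) {
            // desired replicas across the burst: 1, 1, 9, 12, 1
            System.out.println(rps + " rps -> " + planner.desiredReplicas(rps) + " replicas");
        }
    }
}
```

The extra instances exist only while the burst lasts, which is the resource saving the stateless approach relies on.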

Additional Resources

Today we went over three of the most prominent microservices resiliency patterns out there. And, while you can make an argument that using stateless services isn't a resiliency pattern, per se, it's still an extremely valid resiliency tactic for microservices applications.

Looking for additional reading on developing Java microservices? We have a wealth of articles, webinars, and white papers available at our Java microservices hub.

Or, try JRebel free for 10 days to see how much time you can save by skipping redeploys.