Containerization is the bundling of application code and dependencies into a single, virtual package. A containerized application typically sits alongside other applications, and runs via a shared operating system on a computer, server, or cloud.

From chroot to the containers we know today, containerization has changed the way developers build and work with many types of applications. In this article, we look at the basics of containerization, including a history of containerization, the benefits of a container-based application, and the top technologies for developers.

What Is a Software Container?

A software container encapsulates code and requisite dependencies into a single, replicable unit.

Software containers allow teams to run many containerized applications on a single piece of hardware, without emulating hardware and software as you would in a virtual machine. With containers, you put minimal restrictions around processes so that they think they're isolated, with very low overhead. Software containers, at least conceptually, have existed since the 1980s, as we cover in the next section.

An Abridged History of Containerization

The history of containerization begins with chroot in the 1980s, but the term container became common much later, around the inception of the LXC project. But what made containers necessary, and what led to the mainstream adoption we see today?

Let's start with a basic history of application deployment, and why the problems inherent to application deployment led to virtual machines and, eventually, the proliferation of containers.

Before Containers

Before containers, well before containers, we had physical hardware. This was, of course, the only way you could do it: you had to have a machine in order to run the software. You had to buy the machine and go through a procurement effort, which could be very slow. In some cases it took 6-9 months for IT to procure new physical hardware.

You had to wait a long time, and it was also challenging to deploy the software. This was before we had nice things like DevOps and infrastructure as code to help us. You had to guess how much capacity you needed for your application, and you would almost always guess wrong. It was too hard to get right, because it was hard to accurately account for data usage, user growth, and conflicts between the software and hardware.

You could have a single, very large, expensive machine that ran tens or hundreds of applications, or you could have lots of big machines running a single application in a kind of cluster mode. In either case, there were lots of potential issues.

Problems With Application Deployment

Deploying an application was complex, expensive, and inefficient. You had to coordinate teams, and almost everything was a one-off. There was no standard playbook on how to deploy an application. And development teams didn't have the luxury of having an open source marketplace where they could find something perfect for almost any scenario. Development teams had to invent from the ground up, and every team did it a little bit differently.

That's a lot of complexity, and a lot of one-off failure cases. It was expensive, too. You had to buy different machines for your database, application server, and web server, or try to get them all on the same box. If you did, then you would run into challenges with operating system configurations, power management, co-location speeds, cooling, or even dealing with your own data center.

Inefficient Resource Utilization

The end result? Machines were dramatically underused. Development teams would often see average utilization of just 2-3% of their computing capacity, because they provisioned for massive amounts of growth, and reacting to dynamic, flexible, or elastic load was a slow and painful process.

Of course, you still wanted your application to be available during spike periods, so you would have horribly underutilized hardware the rest of the time. That meant a lot of wasted money on resources that weren't getting used.
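To make that arithmetic concrete, here's a rough sketch of how sizing hardware for a spike drags down average utilization. The load figures, the growth multiplier, and the `average_utilization` helper are all made up for illustration; they aren't measurements from a real system.

```python
def average_utilization(hourly_load, provisioned_capacity):
    """Return mean load as a fraction of the capacity that was purchased."""
    if provisioned_capacity <= 0:
        raise ValueError("provisioned_capacity must be positive")
    mean_load = sum(hourly_load) / len(hourly_load)
    return mean_load / provisioned_capacity


# Hypothetical day: 23 quiet hours at 20 requests/s and one spike hour at 500.
hourly_load = [20] * 23 + [500]

# Assumption: capacity is sized at three times the spike to leave room for growth.
provisioned_capacity = 3 * max(hourly_load)

utilization = average_utilization(hourly_load, provisioned_capacity)
print(f"Average utilization: {utilization:.1%}")
```

With these invented numbers, the hardware sits at under 3% average utilization even though it's sized correctly for the spike.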

Conflicts

For teams who wanted to become more efficient, putting a lot of applications on the same machine was the logical next step. But that meant that any one application on that shared machine could easily spike and starve the other applications of CPU, memory, disk, and network resources.

Introduction of Virtual Machines

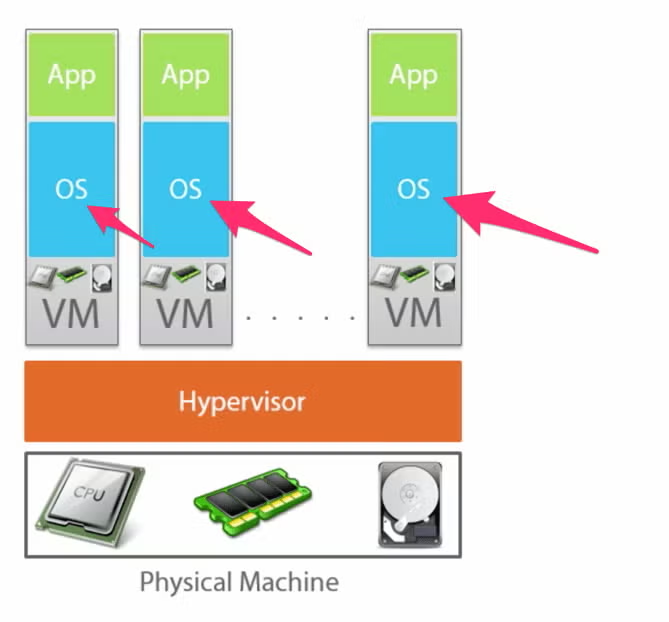

The solution to that problem was the virtual machine. The idea is that instead of having all these applications run on one install in a single hardware environment, on one operating system, teams emulate the hardware.

That means you have different virtual machines, each of which can have a dedicated operating system and a dedicated slice of the machine's resources. This solved a lot of the dependency issues and the conflicts between hardware versions, operating system versions, and dependencies. It also helped to better utilize servers, and gave better isolation between applications.

Virtual Machines Leave Room for Improvement

Although virtualization helped solve a number of problems, it still has one big issue — teams still need to run full operating systems for every application. Then you have the overhead of emulating all the hardware in the machine in software, which slows things down.

You also have issues with trying to predict how many resources each application needs. Because you partition those resources off, you can again end up with certain applications using a small percentage of what they've been allocated, and others that are maxing out their virtual machine and causing issues for their users.

You could maybe run more applications on the machine, but not as many as you would like. You've got a lot of overhead. There's a lot of weight in a purely virtual environment.
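As a simplified illustration of that partitioning problem, the sketch below compares fixed per-VM allocations with what each application actually wants. The application names, core counts, and the `partition_report` helper are hypothetical, and the model ignores real hypervisor features such as overcommitting.

```python
def partition_report(allocated, demand):
    """Compare each VM's fixed allocation with what its application wants.

    Both arguments map an application name to CPU cores.
    Returns (idle, unmet): cores sitting unused and cores of demand not served.
    """
    idle = {}
    unmet = {}
    for app, cores in allocated.items():
        wanted = demand.get(app, 0)
        idle[app] = max(cores - wanted, 0)
        unmet[app] = max(wanted - cores, 0)
    return idle, unmet


# Hypothetical host with 16 cores split evenly across four VMs.
allocated = {"web": 4, "api": 4, "batch": 4, "reports": 4}
demand = {"web": 7, "api": 1, "batch": 4, "reports": 0.5}

idle, unmet = partition_report(allocated, demand)
print("Idle cores:", sum(idle.values()))     # capacity stranded in quiet VMs
print("Unmet demand:", sum(unmet.values()))  # load the busy VM cannot serve
```

In this made-up case the host has enough total capacity for everyone, but the fixed partitions leave one application starved while others sit idle.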

Introduction and Evolution of Container Technologies

People think that containers started with Docker fairly recently, but they've been around for 40 years. Think of chroot, introduced in BSD in the early 1980s, and later chroot jails and BSD jails: lots of different technologies evolved over time.

In the early 2000s, container technologies became more powerful. They started providing more isolation, and started inching toward accessibility. Technologies like Linux-VServer and Solaris Zones brought this concept to a lot of people.

With process containers, later renamed cgroups, you get a little bit more isolation, a little bit more control, a little bit more security, and a little bit more accessibility. Then comes the LXC project, which paves the way for Docker.
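If you're curious what cgroups look like from inside a process, Linux exposes each process's group membership in `/proc/<pid>/cgroup`, one `hierarchy-ID:controller-list:cgroup-path` entry per line. The sketch below parses that format. The sample text and the `parse_cgroup_file` helper are illustrative assumptions; real paths vary by host and container runtime.

```python
from pathlib import Path


def parse_cgroup_file(text):
    """Parse /proc/<pid>/cgroup content into a list of dicts.

    Each line has the form hierarchy-ID:controller-list:cgroup-path.
    On cgroup v2 the controller list is empty and the hierarchy ID is 0.
    """
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy_id": int(hierarchy_id),
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries


# Illustrative sample only; not captured from a real machine.
SAMPLE = "2:cpu,cpuacct:/example/group-a\n1:memory:/example/group-a\n"

for entry in parse_cgroup_file(SAMPLE):
    print(entry)

# On a Linux host, the current process's own membership can be read like this.
proc_file = Path("/proc/self/cgroup")
if proc_file.exists():
    print(parse_cgroup_file(proc_file.read_text()))
```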

The Docker Era

With the introduction of Docker, containers were made much more accessible. From a developer perspective, the user experience and system were a big, positive change for containerization. As with a lot of technologies, it's user experience that makes them thrive and succeed, not necessarily the underlying technology itself.

When we got to ease of use, that's when everybody wanted on board, and when everybody jumps on board, you get more resources and more funding. That funding brings improvement to fundamental aspects of the technology, like security, isolation, and cloud readiness. Then, with an increase in adoption, you get more extensions developed for it, more images developed and shared, and more support available if you run into problems.

Benefits of Containerization

As noted above, containerization solved some big issues in deployment. But containers also provide benefits that go beyond deployment, including an immense capacity for standardization and automation, while making it possible to work across languages and technologies.

| Benefit | Description |
|---|---|
| Developer-Friendly | Containerization and container orchestration technologies are now in the mainstream, and have deep, well-funded integrations with technologies that span language ecosystems. |
| High-Performance | Containers put applications closer to hardware, provide less abstraction, and allow for better distribution of resources across the system. |
| Standardized | Maintained uniformity means containers can be continuously developed, tested, and integrated without concerns for variations within those environments. |
| Automatable | Because containers are standardized, it's easier to automate processes within the development pipeline, making the development process faster. |
| Technology-Agnostic | Containers are technology and language agnostic, meaning they can work regardless of the underlying operating system and physical server configuration. |

Top Containerization Technologies

As we discussed above, containers are hardly mentioned without Docker these days, and you won't hear Docker mentioned very often without Kubernetes. But why are Docker and Kubernetes the top containerization and container-orchestration technologies today? What benefits do they have that explain such widespread adoption? Let's take a closer look.

Docker

What do we get from things like Docker? At the most basic level, uniformity. Docker containers are meant to be all the same, allowing you to package an application once and deploy it anywhere.

What's in the package is up to you, and that's kind of an implementation detail, but the package itself, the container image, can be opened, inspected, and executed in lots of different environments and operating systems.

Accessible and Easy to Use

The Docker toolkit makes containers easier for developers to use. They're not digging into the guts of cgroups or recompiling anything. They're getting that functionality out of the box. It's easy to use, and doesn't leave a lot of sharp edges. You just look at a tutorial and five minutes later you're up and running.

As mentioned earlier, the improved user experience over other containerization technologies really set Docker apart when it came on the scene. There are some competitors, of course, but Docker has bubbled to the top and gets the most attention and most deployment.

Language and Technology Agnostic

Because containers are language and technology agnostic, you can use any type of code you want, regardless of language. That makes it easy to use the framework, tools, and technologies that best fit the needs of your application instead of making something work that isn't a perfect fit.

Well-Populated Marketplace

One reason for Docker's popularity is that it has a well-used and frequented image marketplace. Developers are used to having package managers for their code, whatever they're working in: Java, Node, C#, Rust, Python, Ruby, you name it. Those languages have standard package managers where you can do a quick search, find what you need, and with one or two quick command line statements you're up and running. The package manager has pulled down the package and its dependencies, and it's ready to use.

Docker gives you the same experience around a higher-level component, in this case pre-configured processes with networking, passwords, and basic configuration, so that you can, for example, get a database, an application server, or a web server. That's the level of abstraction you're dealing with, and it's just as easy as using a package manager. Fast and easy developer UX, that's what it's all about. That's why it took off.
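As a sketch of what "get a database with one command" can look like, the snippet below assembles a `docker run` command line for a PostgreSQL container. The `docker_run_command` helper, the container name, the image tag, and the password are assumptions chosen for illustration; the script only prints the command and doesn't start anything.

```python
import shlex


def docker_run_command(image, name, env=None, ports=None):
    """Build the argument list for a detached `docker run`.

    env maps variable names to values; ports maps host ports to container ports.
    """
    args = ["docker", "run", "--detach", "--name", name]
    for key, value in (env or {}).items():
        args += ["--env", f"{key}={value}"]
    for host_port, container_port in (ports or {}).items():
        args += ["--publish", f"{host_port}:{container_port}"]
    args.append(image)
    return args


# Hypothetical values; substitute your own image tag, name, and password.
command = docker_run_command(
    image="postgres:16",
    name="demo-db",
    env={"POSTGRES_PASSWORD": "change-me"},
    ports={5432: 5432},
)
print(shlex.join(command))

# To actually start the container (requires Docker to be installed):
# import subprocess; subprocess.run(command, check=True)
```

Building the argument list separately from running it keeps the example safe to execute on a machine without Docker.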

Long-Term Outlook

Docker is certainly the industry standard for containerization right now, but there are many other container technologies out there, including CoreOS rkt, containerd, LXC, and Mesos. Will those technologies reach the adoption level of Docker?

Right now it seems unlikely. Docker isn't a one size fits all solution, obviously, but it has become well adopted. That widespread adoption means it gets more investment from large companies, further solidifying its hold on the container technology market.

Kubernetes

Kubernetes sits on top of your containers, and Docker is primarily what we see in the enterprise. It lets you extend the abstraction from the single process or server component that's typical of a Docker container to a whole set of them, kind of like a distributed application in a box. That means your web server, app server, database, or your collection of microservices can fit together under one umbrella.

With Kubernetes, you can more easily manage that collection of containers, unlocking the real benefits of microservices: scaling, monitoring, and self-repair, all without worrying about how to do it yourself.
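The idea behind self-repair can be sketched as a control loop: compare the state you asked for with the state you observe, then work out what to change. The toy `reconcile` function below illustrates that idea only. It isn't Kubernetes code, and the container names are hypothetical.

```python
def reconcile(desired_replicas, running):
    """Return the actions needed to move observed state toward desired state.

    running maps a container name to True (healthy) or False (failed).
    """
    actions = []
    healthy = [name for name, ok in running.items() if ok]

    # Failed containers are removed so replacements can take their place.
    for name, ok in running.items():
        if not ok:
            actions.append(("remove", name))

    shortfall = desired_replicas - len(healthy)
    if shortfall > 0:
        # Placeholder names; a real system would generate unique ones.
        actions += [("start", f"replica-{i}") for i in range(shortfall)]
    elif shortfall < 0:
        actions += [("stop", name) for name in healthy[:-shortfall]]
    return actions


# Hypothetical observation: three replicas wanted, one has failed.
observed = {"web-a": True, "web-b": False, "web-c": True}
print(reconcile(3, observed))
```

Running a check like this repeatedly is what lets a system recover from a failed container without anyone stepping in.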

Automated Scaling, Monitoring, Self-Repair

Kubernetes gives you basic load balancing and autoscaling. That means processes like scaling up, scaling down, service monitoring, and service repair are all happening without direct human interaction.
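For a feel of how autoscaling decides on a replica count, the Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as the current replica count multiplied by the ratio of the current metric to the target metric, rounded up. The sketch below applies that ratio and clamps it to configured bounds. The `desired_replicas` function, its default bounds, and the readings are assumptions for illustration, and the real autoscaler also applies tolerances and stabilization rules that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the core Horizontal Pod Autoscaler ratio, then clamp to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical readings: average CPU utilization in percent against a 50% target.
print(desired_replicas(4, current_metric=90, target_metric=50))  # scale up
print(desired_replicas(4, current_metric=10, target_metric=50))  # scale down
```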

A Data Center in a Box

At the beginning of the article we mentioned data centers, and how involved they were from a deployment perspective. Kubernetes container orchestration allows for what's essentially a mini data center in a box. This allows you to define all your networking, compute, persistence, and scaling at the developer and system administrator level and have Docker and Kubernetes take you from point A to point B instead of dealing with all the details needed to make that journey yourself.
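Defining things "at the developer level" usually means writing a declarative manifest. As a minimal sketch, the snippet below builds a Kubernetes Deployment as a Python dictionary and prints it as JSON. The `deployment_manifest` helper, the application name, the image reference, and the port are hypothetical, and a production manifest would normally carry more settings, such as resource limits and health probes.

```python
import json


def deployment_manifest(name, image, replicas, container_port):
    """Build a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }],
                },
            },
        },
    }


# Hypothetical application name and image reference.
manifest = deployment_manifest("web", "example.com/web:1.0",
                               replicas=3, container_port=8080)
print(json.dumps(manifest, indent=2))
```

Saved to a file, output like this can be handed to `kubectl apply -f`, which accepts JSON as well as YAML.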

Final Thoughts

There are obviously pros and cons for Java developers working in an enterprise environment, and it's important to go in with eyes wide open. As mentioned earlier, containers aren't the right fit for every application, and making a move to a containerized application architecture can be time-consuming and complicated. If you're making the move, don't set tight deadlines before you get to production. Make sure you have time to experiment and get it right. It takes time to get that experience, and it's easy to get it wrong.

Additional Resources

If you're considering containerization, be sure to check out the webinar below from Rod Cope. It covers the strategies and processes that development teams need to consider before transitioning or creating a full microservices application.

Looking for additional insights into containers, Java container technologies, and microservices? The resources below are a great place to get started.

- Resource Collection: Exploring Java Microservices

- White Paper: Developer's Guide to Microservices Performance

- On-Demand Webinar: Engineering for Microservices Resiliency

- Blog: Docker Microservices in Java

- Blog: Kubernetes vs. Docker Swarm
